-

Zash

mod_smacks did have such limits for a short time, but they caused exactly this problem and were then removed until someone can come up with a better way to deal with it

-

Ge0rG

was that the thing that made my server explode due to an unbound smacks queue? ;)

-

Zash

I got the impression that yesterday's discussion was about the opposite problem, killing the session once the queue is too large

-

Zash

So, no, not that issue.

-

lovetox

it turned out the user which reported that problem had a queue size of 1000

-

Ge0rG

what lovetox describes sounds like a case of too much burst traffic, not of socket synchronization issues

-

lovetox

the current ejabberd default is much higher

-

lovetox

but a few versions ago it was 1000

-

Ge0rG

if you join a dozen MUCs at the same time, you might well run into a 1000 stanza limit

-

lovetox

1000 is like nothing

-

lovetox

you can't even join one IRC room like #ubuntu or #python

-

Ge0rG

lovetox: only join MUCs once at a time ;)

-

lovetox

you get instantly disconnected

-

Ge0rG

Matrix HQ

-

Ge0rG

unless the bridge is down ;)

-

lovetox

the current ejabberd default is 5000

-

lovetox

which until now works ok

-

Ge0rG

I'm sure the Matrix HQ has more users. But maybe it's slow enough in pushing them over the bridge that you can fetch them from your server before you are killed

-

Kev

Ge0rG: Oh, you're suggesting that it's a kill based on timing out an ack because the server is ignoring that it's reading stuff from the socket that's acking old stanzas, rather than timing out on data not being received?

-

Kev

That seems plausible.

-

Ge0rG

Kev: I'm not sure it has to do with the server's reading side of the c2s socket at all

- Holger <- still trying to parse that sentence :-)

-

Zash

Dunno how ejabberd works but that old mod_smacks version just killed the session once it hit n queued stanzas.

-

Ge0rG

Holger: I'm not sure I understood it either

-

Ge0rG

Kev: I think it's about N stanzas suddenly arriving for a client, with N being larger than the maximum queue size

-

Holger

Zash: That's how ejabberd works. Yes that's problematic, but doing nothing is even more so, and so far I see no better solution.

-

Kev

Ah. I understand now, yes.

-

Ge0rG

Holger: you could add a time-based component to that, i.e. allow short bursts to exceed the limit

-

Ge0rG

give the client a chance to consume the burst and to ack it
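
Ge0rG's time-based component could look roughly like the sketch below. It assumes a hypothetical queue with two limits: a soft limit that may be exceeded for at most `grace` seconds (so a MUC-join burst can be consumed and acked) and a hard limit that still ends the session immediately, which addresses Holger's OOM concern. The class and parameter names are illustrative only; this is not how mod_smacks or ejabberd implement their limits.

```python
import time
from collections import deque


class BurstTolerantQueue:
    """Hypothetical unacked-stanza queue with a time-based burst allowance.

    soft_limit: the queue may exceed this only for a short burst.
    hard_limit: exceeding this always ends the session (OOM guard).
    grace:      seconds the queue may stay above soft_limit.
    """

    def __init__(self, soft_limit, hard_limit, grace, clock=time.monotonic):
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.grace = grace
        self.clock = clock
        self.items = deque()
        self.over_since = None  # time we first went above soft_limit

    def push(self, stanza):
        """Queue an outgoing stanza; False means 'close the session'."""
        self.items.append(stanza)
        return self._within_limits()

    def ack(self, count):
        """Drop `count` stanzas the client has acknowledged."""
        for _ in range(min(count, len(self.items))):
            self.items.popleft()
        return self._within_limits()

    def _within_limits(self):
        size = len(self.items)
        if size > self.hard_limit:
            return False
        if size <= self.soft_limit:
            self.over_since = None  # burst consumed, reset the timer
            return True
        now = self.clock()
        if self.over_since is None:
            self.over_since = now
        return now - self.over_since <= self.grace
```

The injectable `clock` is only there so the timing can be tested without sleeping.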

-

Holger

I mean if you do nothing it's absolutely trivial to OOM-kill the entire server.

-

Ge0rG

Holger: BTDT

-

Zash

Ge0rG: Wasn't that a feedback loop tho?

-

Ge0rG

Zash: yes, but a queue limit would have prevented it

-

Kev

It's not clear to me how you solve that problem reasonably.

-

Ge0rG

Keep an eye on the largest known MUCs, and make the limit slightly larger than the sum of the top 5 room occupants

-

Ge0rG

And by MUCs, I also mean bridged rooms of any sort
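
As arithmetic, Ge0rG's rule of thumb is a one-liner. The 10% headroom standing in for "slightly larger" is an assumption, as is the function name.

```python
def suggested_queue_limit(occupant_counts, top_n=5, headroom_pct=10):
    """Hypothetical heuristic: sum of occupants of the top_n largest known
    rooms (MUCs or bridged rooms), plus headroom_pct percent on top."""
    largest = sorted(occupant_counts, reverse=True)[:top_n]
    # Integer arithmetic avoids float rounding surprises.
    return sum(largest) * (100 + headroom_pct) // 100
```

For rooms of 5000, 1200, 800, 300, 150 and 40 occupants, the top five sum to 7450 and the suggested limit is 8195.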

-

Holger

Get rid of stream management :-)

-

Zash

Get rid of the queue

-

Ge0rG

Get rid of clients

-

Kev

I think this is only related to stream management, no? You end up with a queue somewhere?

-

Zash

Yes! Only servers!

-

jonas’

Zash, peer to peer?

-

Zash

NOOOOOOOO

-

Ge0rG

Holger, Zash: we could implement per-JID s2s backpressure

-

Zash

Well no, but yes

-

Holger

Kev: You end up with stored MAM messages.

-

Ge0rG

s2s 0198, but scoped to individual JIDs

-

Ge0rG

also that old revision that allowed requesting throttling from the remote end
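
One way to picture "s2s 0198, but scoped to individual JIDs" is a per-recipient credit window on the sending server, sketched below. Everything here is hypothetical (no such XEP exists in this form). Note that the backlog simply moves to the sending server, which is Kev's point that you end up with a queue somewhere.

```python
from collections import defaultdict, deque


class PerJidWindow:
    """Hypothetical sender-side flow control on an s2s link: at most
    `window` unacked stanzas in flight per recipient JID; the rest wait
    in a per-JID backlog on the sending server."""

    def __init__(self, window):
        self.window = window
        self.in_flight = defaultdict(int)
        self.backlog = defaultdict(deque)

    def submit(self, jid, stanza):
        """Queue a stanza for `jid`; return the stanzas that may go out now."""
        self.backlog[jid].append(stanza)
        return self._drain(jid)

    def ack(self, jid, count):
        """The remote server acknowledged `count` stanzas for `jid`."""
        self.in_flight[jid] = max(0, self.in_flight[jid] - count)
        return self._drain(jid)

    def _drain(self, jid):
        ready = []
        queue = self.backlog[jid]
        while queue and self.in_flight[jid] < self.window:
            ready.append(queue.popleft())
            self.in_flight[jid] += 1
        return ready
```

A congested recipient only stalls its own backlog; other JIDs on the same link keep flowing.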

-

Zash

You could make it so that resumption is not possible if there are more unacked stanzas than a (smaller) queue size

-

Zash

At some point it's going to be just as expensive to start over with a fresh session
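
Zash's alternative can be sketched as a queue that, past a smaller `resume_limit`, discards its stored copies and marks the session as non-resumable instead of killing the live connection. This is an illustrative sketch under that assumption, not an existing module.

```python
from collections import deque


class UnackedQueue:
    """Hypothetical smacks-style queue: exceeding resume_limit does not close
    the live stream; it frees the stored stanzas and gives up on resumption,
    so a reconnecting client would start a fresh session instead."""

    def __init__(self, resume_limit):
        self.resume_limit = resume_limit
        self.items = deque()
        self.unacked = 0       # still counted so client acks can be processed
        self.resumable = True

    def push(self, stanza):
        self.unacked += 1
        if self.resumable:
            self.items.append(stanza)
            if len(self.items) > self.resume_limit:
                self.items.clear()       # free memory instead of closing
                self.resumable = False

    def ack(self, count):
        """Process an acknowledgement of `count` further stanzas."""
        count = min(count, self.unacked)
        self.unacked -= count
        for _ in range(min(count, len(self.items))):
            self.items.popleft()
```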

-

Ge0rG

Zash: a client that auto-joins a big MUC on connect will surely cope with such invisible limits

-

Holger

Where you obviously might want to implement some sort of disk storage quota, but that's less likely to be too small for clients to cope. Also the burst is often just presence stanzas, which we might be able to reduce/avoid some way.

-

Zash

Soooo, presence based MUC is the problem yet again

-

Holger

Anyway, until you guys fixed all these things for me, I'll want to have a queue size limit :-)

-

Zash

I remember discussing MUC optimizations, like skipping most initial presence for large channels

-

Ge0rG

we need incremental presence updates.

-

Holger

ejabberd's room config has an "omit that presence crap altogether" knob. I think p1 customers usually press that and then things suddenly work.

-

eta

isn't there a XEP for room presence list deltas

-

eta

I also don't enjoy getting megabytes of presence upon joining all the MUCs

-

Zash

eta: Yeah, XEP-0436 MUC presence versioning

-

eta

does anyone plan on implementing it?

-

Zash

I suspect someone is. Not me tho, not right now.

-

Zash

Having experimented with presence deduplication, I got the feeling that every single presence stanza is unique, making deltas pretty large

-

eta

oh gods

-

Zash

And given the rate of presence updates in the kind of MUC where you'd want optimizations... not sure how much deltas will help.

-

Holger

Yeah I was wondering about the effectiveness for large rooms as well.

-

Zash

Just recording every presence update and replaying it like MAM sure won't do. Actual diff will be better, but will it be enough?

-

Zash

Would be nice to have some kind of numbers

-

Ge0rG

So we need to split presence into "room membership updates" and "live user status updates"?

-

Zash

MIX?

-

Zash

Affiliation updates and quitjoins is easy enough to separate

-

Ge0rG

and then we end up with matrix-style rooms, and some clients joining and leaving the membership all the time

-

Zash

So we have affiliations, currently present nicknames (ie roles) and presence updates

-

Zash

I've been thinking along the lines of that early CSI presence optimizer, where you'd only send presence for "active users" (spoke recently or somesuch). Would be neat to have a summary-ish stanza saying "I just sent you n out of m presences"

-

Zash

You could also ignore pure presence updates from unaffiliated users and that kind of thing
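
The two filters Zash describes might look like this. The occupant record shape (`last_spoke`, `affiliation`), the one-hour activity window and the summary dict are all assumptions made for illustration.

```python
def initial_presences(occupants, now, active_window=3600):
    """Hypothetical: on join, send presence only for occupants who spoke
    within active_window seconds, plus an 'n out of m' summary."""
    active = [o for o in occupants
              if o["last_spoke"] is not None
              and now - o["last_spoke"] <= active_window]
    summary = {"sent": len(active), "total": len(occupants)}
    return active, summary


def forward_update(occupant, is_join_or_leave):
    """Hypothetical: after the join, drop pure status updates (anything that
    is not a join or leave) from occupants without an affiliation."""
    return is_join_or_leave or occupant["affiliation"] != "none"
```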

-

Ge0rG

also you only want to know the total number of users and the first page full of them, the other ones aren't displayed anyway ;)

-

Zash

Yeah

-

flow

Zash> Soooo, presence based MUC is the problem yet again

I think the fundamental design problem is pushing stanzas instead of recipients requesting them. Think, for example, of a participant in a high-traffic MUC on a low-throughput connection (e.g. GSM). That MUC could easily kill the participant's connection

-

Zash

You do request them by joining.

-

flow

Zash, sure, let me clarify: requesting them in smaller batches (e.g. MAM pagination style)

-

Zash

You just described how Matrix works btw

-

flow

I did not know that, but it appears like one (probably sensible) solution to the flow control / traffic management problem we have

-

jonas’

or like MIX ;D

-

Ge0rG

let's just do everything in small batches.

-

flow

correct me if I am wrong, but MIX's default modus operandi is still to fan-out all messages

-

jonas’

I think only if you subscribe to messages

-

jonas’

also, I thought we were talking about *presence*, not messages.

-

flow

I think the stanza kind does not matter

-

flow

if someone sends you stanzas at a higher rate than you can consume, some intermediate queue will fill

-

jonas’

yeah, well, that’s true for everything

-

flow

hence I wrote "fundamental design problem"

-

jonas’

I can see the case for MUC/MIX presence because that’s a massive amplification (you send single presence, you get a gazillion and a continuous stream back)

-

jonas’

yeah, no, I don’t believe in polling for messages

-

Kev

The main issue is catchup.

-

jonas’

if you’re into that kind of stuff, use BOSH

-

flow

I did not say anything about polling

-

Kev

Whether when you join you receive a flood of everything, or whether you request stuff when you're ready for it, in batches.

-

Kev

Using MAM on MIX is meant to give you the latter.

-

flow

and yes, the problem is more likely caused by presence stanzas, but could be caused by IQs or messages as well

-

Kev

If you have a room that is itself generating 'live' stanzas at such a rate that it fills queues, that is also a problem, but is distinct from the 'joining lots of MUCs disconnects me' problem.

-

flow

Kev, using the user's MAM service or the MIX channel's MAM service?

-

Kev

Both use the same paging mechanic.

-

jonas’

12:41:06 flow1> Zash, sure, let me clarify: requesting them in smaller batches (e.g. MAM pagination style)

how is that not polling then?

-

jonas’

though I sense that this is a discussion about semantics I don’t want to get into right now.

-

flow

right, I wanted to head towards the question of how to be notified that there are new messages that you may want to request

-

jonas’

by receiving a <message/> with the message.

-

flow

that does not appear to be a solution, as you easily run into the same problem

-

jonas’

[citation needed]

-

flow

I was thinking more along the lines of infrequent/slightly delayed notifications with the current stanza/message head ID(s)

-

Holger

MAM/Sub!

-

flow

but then again, it does not appear to be an elegant solution (or potentially is no solution at all)

-

Zash

Oh, this is basically the same problem as IP congestion, is it not?

-

Zash

And the way to solve that is to throw data away. Enjoy telling your users that.

-

Zash

> The main issue is catchup.

This. So now you'll have to figure out what data got thrown away and fetch it.

-

Zash

(Also how Matrix works.)

-

eta

the one thing that may be good to steal from matrix is push rules

-

eta

i.e. some server side filtering you can do to figure out what should generate a push notification

-

Zash

Can you rephrase that in a way that doesn't make me want to say "but they stole this from us"

-

eta

well so CSI filtering is an XMPP technology, right

-

eta

but there's no API to extend it

-

eta

like you can't say "please send me everything matching the regex /e+ta/"

-

Zash

"push rules" meaning what, exactly?

-

pep.

Zash: it's just reusing good ideas :p

-

Zash

You said "push notifications", so I assumed "*mobile* push notifications"

-

Ge0rG

Zash: a filter that the client can define to tell the server what's "important"

-

Zash

AMP?

-

eta

Zash, so yeah, push rules are used for mobile push notifications in Matrix

-

Zash

Push a mod_firewall script? 🙂

-

Ge0rG

for push notifications, the logic is in the push server, which is specific to the client implementation

-

Zash

eta: So you mean user-configurable rules?

-

eta

Zash, yeah

-

Ge0rG

not rather client-configurable?

-

eta

I mean this is ultimately flawed anyway because e2ee is a thing

-

Zash

Everything is moot because E2EE

-

Ge0rG

I'm pretty sure there is no place in matrix where you can enter push rule regexes

-

pulkomandy

Is the problem really to be solved on the client-server link? What about some kind of flow control on the s2s side instead? (no idea about the s2s things in xmpp, so maybe that's not doable)

-

eta

Ge0rG, tada https://matrix.org/docs/spec/client_server/r0.6.1#m-push-rules

-

Zash

Ge0rG: Keywords tho, which might be enough

-

eta

you can have a "glob-style pattern"

-

Zash

Ugh

-

Ge0rG

eta: that's not what I mean

-

Ge0rG

eta: show me a Riot screenshot where you can define those globs

-

eta

Ge0rG, hmm, can't you put them into the custom keywords field

-

pulkomandy

If you try to solve it on client side you will invent something like tcp windows. Which is indeed a way to solve ip congestion. And doesn't work here because congestion on the server to client socket doesn't propagate to other links

- eta doesn't really care about this argument though and is very willing to just concede to Ge0rG :p

-

Zash

What was that thing in XEP-0198 that got removed? Wasn't that rate limiting?

-

Ge0rG

Zash: yes

-

eta

I think the presence-spam-in-large-MUCs issue probably needs some form of lazy loading, right

-

eta

like, send user presence before they talk

-

eta

have an API (probably with RSM?) to fetch all user presences
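
A lazy-loading API along these lines could page through the occupant list RSM-style (XEP-0059 uses `max`, `after` and a total `count`). The function below is a hypothetical in-memory model of such paging keyed by nickname, not an existing MUC protocol; it also returns the total, which covers Ge0rG's "total number of users and the first page".

```python
import bisect


def rsm_page(occupants, max_items, after=None):
    """Hypothetical RSM-style page over a room's occupants (nick -> show).

    Returns (page, last_key, total_count); pass last_key back as `after`
    to fetch the next page. An empty page means the end was reached."""
    nicks = sorted(occupants)
    start = 0
    if after is not None:
        # First nick strictly after the previous page's last key.
        start = bisect.bisect_right(nicks, after)
    page = nicks[start:start + max_items]
    last = page[-1] if page else None
    return [(n, occupants[n]) for n in page], last, len(nicks)
```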

-

Zash

eta: Yeah, that's what I was thinking

-

eta

the matrix people had pretty much this exact issue and solved it the same way

-

Zash

Oh no, then we need to do it differently!!11!!11!!1 eleven

-

eta

Zash, it's fine, they use {} brackets and we'll use <> ;P

-

Zash

Phew 😃

-

eta

the issue with lots of messages in active MUCs is more interesting though

-

eta

like for me, Conversations chews battery because I'm in like 6-7 really active IRC channels

-

eta

so my phone never sleeps

-

eta

I've been thinking I should do some CSI filtering, but then the issue is you fill up the CSI queue

-

Zash

A thing I've almost started stealing from Matrix is room priorities.

-

Zash

So I have a command where I can mark public channels as low-priority, and then nothing from those gets pushed through CSI
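
A CSI buffer with per-room priorities could be modeled as below. The "important" rule (a body, and not from a low-priority room) and flushing when `max_buffer` is reached are assumptions; flushing rather than dropping is one way to bound the in-memory queue eta worries about without losing data.

```python
class CsiBuffer:
    """Hypothetical CSI buffer for an inactive client. Stanzas from rooms
    marked low-priority are only buffered; an important stanza flushes the
    whole buffer, and so does reaching max_buffer (to bound memory)."""

    def __init__(self, low_priority_rooms, max_buffer):
        self.low_priority_rooms = set(low_priority_rooms)
        self.max_buffer = max_buffer
        self.buffer = []

    def is_important(self, stanza):
        # Assumed rule: only stanzas with a body can be important, and
        # groupchat messages only if their room is not low-priority.
        if not stanza.get("body"):
            return False
        if stanza["type"] == "groupchat":
            return stanza["from_room"] not in self.low_priority_rooms
        return True

    def handle(self, stanza):
        """Return the stanzas to deliver now (possibly an empty list)."""
        self.buffer.append(stanza)
        if self.is_important(stanza) or len(self.buffer) >= self.max_buffer:
            out, self.buffer = self.buffer, []
            return out
        return []
```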

-

Ge0rG

eta: the challenge here indeed is that all messages will bypass CSI, which is not perfect

-

eta

Zash, yeah, there's that prosody module for that

-

Ge0rG

eta: practically speaking, you might want to have a wordlist that MUC messages must match to be pushed

-

eta

I almost feel like the ideal solution is something more like

-

eta

I want the server to join the MUC for me

-

eta

I don't want my clients to join the MUC (disable autojoin in bookmarks)

-

eta

and if I get mentioned or something, I want the server to somehow forward the mentioned message

-

Ge0rG

eta: your client still needs to get all the MUC data, eventually

-

eta

Ge0rG, sure

-

eta

but, like, I'll get the forwarded message with the highlight

-

eta

then I can click/tap on the MUC to join it

-

Ge0rG

eta: so CSI with what Zash described is actually good

-

eta

and then use MAM to lazy-paginate

-

eta

Ge0rG, yeah, but it fills up in-memory queues serverside

-

Ge0rG

eta: but I think that command is too magic for us mortals

-

Ge0rG

eta: yes, but a hundred messages isn't much in the grand scheme of things

-

eta

Ge0rG, a hundred is an underestimate ;P

-

eta

some of the IRC channels have like 100 messages in 5 minutes or something crazy

-

Holger

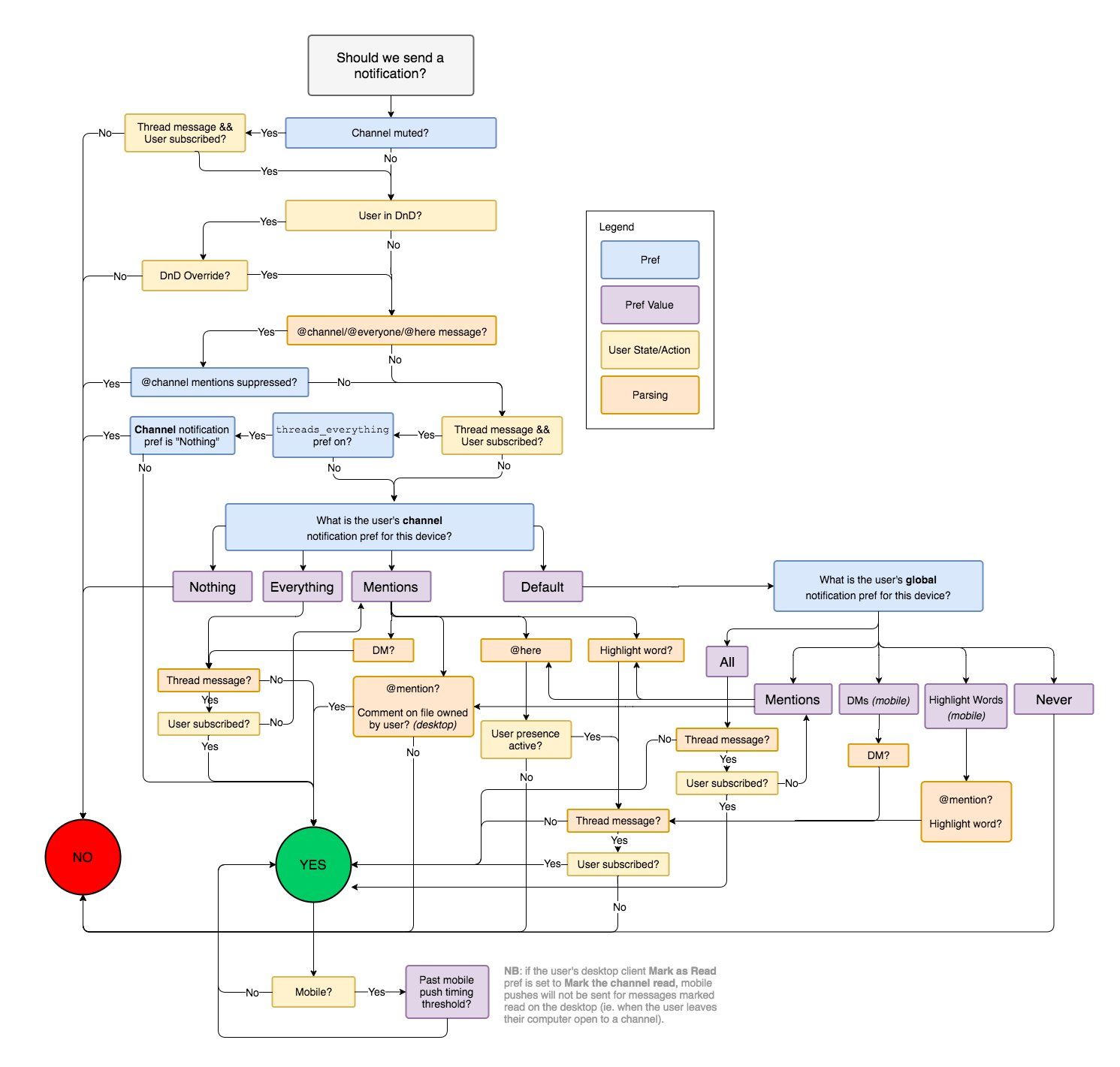

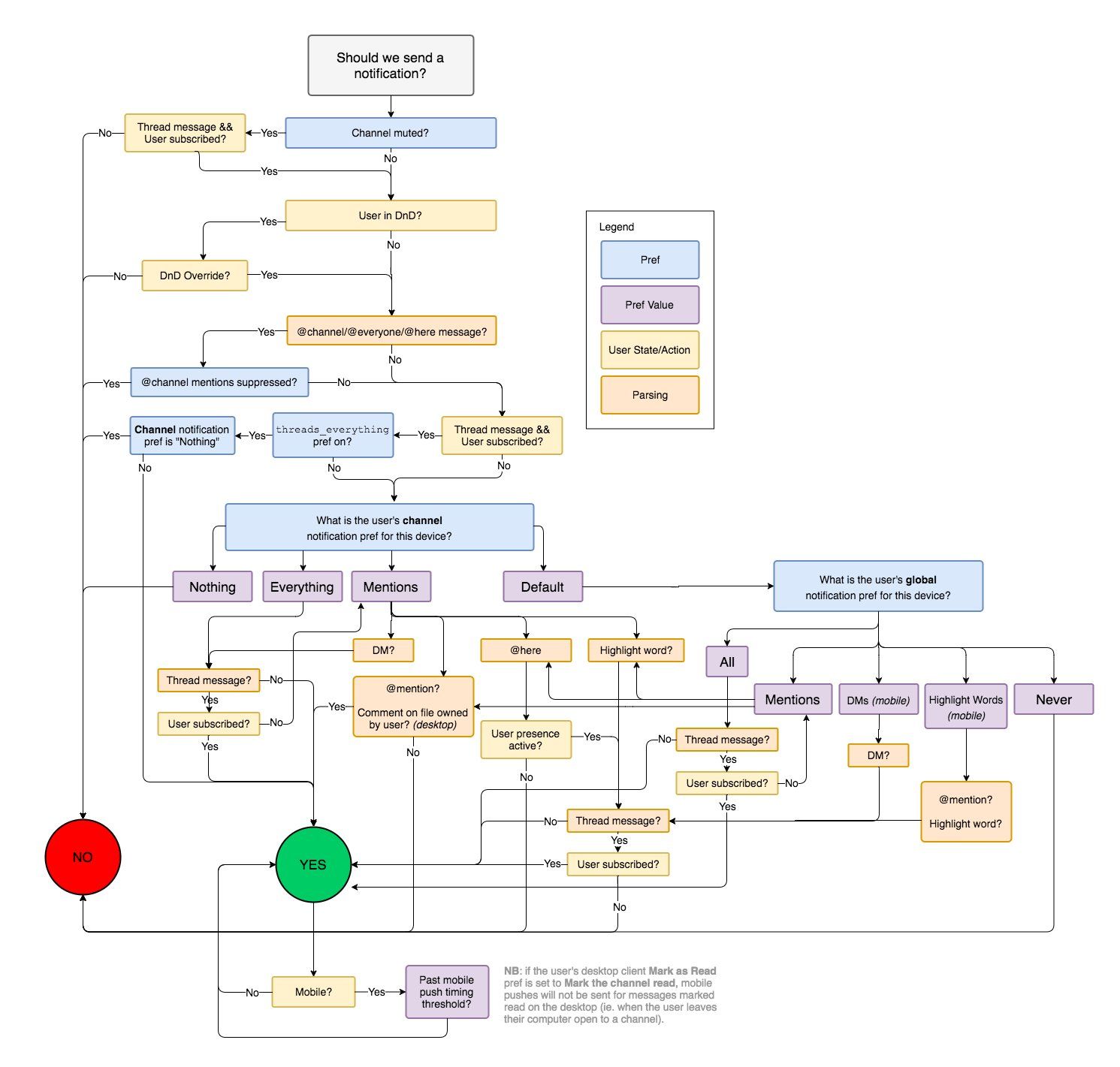

https://jabber.fu-berlin.de/share/holger/EuIflBOiuR0UyOtA/notifications.jpeg

-

Holger

C'mon guys this is trivial to solve.

-

Ge0rG

my prosody is currently consuming ~ 500kB per online user

-

Holger

https://jabber.fu-berlin.de/share/holger/aIlgwvzEMWv66zF9/notifications.jpeg

-

Holger

Oops.

-

eta

Zash, also ideally that prosody module would use bookmarks

-

eta

instead of an ad-hoc command

-

Ge0rG

eta: naah

-

Zash

Bookmarks2 with a priority extension would be cool

-

Ge0rG

we need a per-JID notification preference, like "never" / "always" / "on mention" / "on string match"

-

Ge0rG

which is enforced by the server
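
Ge0rG's per-JID preference could be evaluated server-side roughly like this. The enum values mirror his four options; matching the nick as a whole word (case-insensitively) and treating keywords as plain substrings are assumptions, and the default of "always" is arbitrary.

```python
import re
from enum import Enum


class NotifyPref(Enum):
    NEVER = "never"
    ALWAYS = "always"
    ON_MENTION = "on mention"
    ON_MATCH = "on string match"


def should_notify(prefs, sender, body, my_nick, keywords=(),
                  default=NotifyPref.ALWAYS):
    """Hypothetical server-side check: should this message wake the client?"""
    pref = prefs.get(sender, default)
    if pref is NotifyPref.NEVER:
        return False
    if pref is NotifyPref.ALWAYS:
        return True
    # Nick as a whole word; lookarounds also work for nicks ending in
    # punctuation, where \b would not.
    pattern = r"(?<!\w)" + re.escape(my_nick) + r"(?!\w)"
    mentioned = re.search(pattern, body, re.IGNORECASE) is not None
    if pref is NotifyPref.ON_MENTION:
        return mentioned
    lowered = body.lower()  # ON_MATCH: mention or any keyword substring
    return mentioned or any(k.lower() in lowered for k in keywords)
```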

-

eta

Ge0rG: that's a different thing though

-

Ge0rG

eta: is it really?

-

Ge0rG

eta: for mobile devices, CSI-passthrough is only relevant for notification causing messages

-

eta

Ge0rG: ...actually, yeah, I agree

-

Ge0rG

you want to get pushed all the messages that will trigger a notification

-

Ge0rG

which ironically means that all self-messages get pushed through so that the mobile client can *clear* notifications

-

Ge0rG

which ironically also pushes outgoing Receipts

-

Ge0rG

eta: I'm sure I've written a novel or two on standards regarding that

-

Ge0rG

or maybe just in the prosody issue tracker

-

Ge0rG

eta: also CSI is currently in Last Call, so feel free to add your two cents

-

Zash

Ironically?

- Ge0rG isn't going to re-post his "What's Wrong with XMPP" slide deck again

-

Ge0rG

Also the topic of notification is just a TODO there.

-

Zash

Heh

-

Zash

> you want to get pushed all the messages that will trigger a notification

and that's roughly the same set that you want archived and carbon'd, I think, but not exactly

-

eta

Ge0rG: wait that sounds like an interesting slide deck

-

eta

Zash: wild idea, just maintain a MAM archive for "notifications"

-

eta

I guess a pubsub node would also work

-

eta

and you shove all said "interesting" messages in there

-

Ge0rG

eta: https://op-co.de/tmp/whats-wrong-with-xmpp-2017.pdf

-

Zash

eta: MAM for the entire stream?

-

Zash

Wait, what's "notifications" here?

-

Zash

Stuff that causes the CSI queue to get flushed? Most of that'll be in MAM already.

-

eta

Zash: well mentions really

-

Ge0rG

eta: MAM doesn't give you push though

-

eta

Ge0rG: okay, after reading those slides I'd say that's a pretty good summary and proposal

-

Ge0rG

eta: all it needs is somebody to implement all the moving parts

-

Zash

Break it into smaller (no, even smaller!) pieces and file bug reports?

-

Zash

/correct feature requests*

-

Ge0rG

when I break it into such small pieces, the context gets lost

-

Ge0rG

like just now I realized there might be some smarter way to handle "sent" carbons in CSI, than just passing all through

-

Zash

One huge "do all these things" isn't great either

-

Ge0rG

but maybe a sent carbon of a Receipt isn't too bad after all, because it most often comes shortly after the original message that also pierced CSI?

-

Ge0rG

did I mention that I'm collecting large amounts of data on the number and reason of CSI wakeups?

-

Zash

Possibly (re carbon-receipts)

-

Ge0rG

and that the #1 reason used to be disco#info requests to the client?

-

Zash

Did I mention that I too collected stats on that, until I discovered that storing stats murdered my server?

-

Ge0rG

I'm only "storing" them in prosody.log, and that expires after 14d

-

Ge0rG

but maybe somebody wants to bring them to some use?

-

Zash

disco#info cache helped a *lot* IIRC

-

Zash

I also found that a silly amount of wakeups were due to my own messages on another device, after which I wrote a grace period thing for that.

-

Zash

IIRC before I got rid of stats collection it was mostly client-initiated wakeups that triggered CSI flushes

-

Ge0rG

Zash: "own messages on other device" needs some kind of logic maybe

-

Ge0rG

like: remember the last message direction per JID, only wake up on outgoing read-marker / body when direction changes?
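
Ge0rG's direction-change rule for "sent" carbons fits in a few lines. The `has_body_or_marker` flag and the choice to wake when no direction is known yet (the conservative option) are assumptions of this sketch.

```python
class SentCarbonWakeup:
    """Hypothetical CSI rule for 'sent' carbons: an outgoing body or read
    marker wakes the phone only when the last direction for that JID was
    not already outgoing (i.e. there may be a notification to clear)."""

    def __init__(self):
        self.last_direction = {}  # jid -> "in" or "out"

    def on_incoming(self, jid):
        self.last_direction[jid] = "in"

    def on_outgoing(self, jid, has_body_or_marker):
        """Return True if this sent carbon should flush the CSI queue."""
        if not has_body_or_marker:
            return False  # e.g. chat states never wake the phone
        # Unknown direction counts as a change, so we err on waking up.
        changed = self.last_direction.get(jid) != "out"
        self.last_direction[jid] = "out"
        return changed
```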

-

Zash

Ge0rG: Consider me, writing here, right now, on my work station. Groupchat messages sent to my phone.

-

Ge0rG

just waking up on outgoing read-marker / body would be a huge improvement already

-

Ge0rG

Zash: yes, that groupchat message is supposed to clear an eventual notification for the groupchat

-

Ge0rG

that = your

-

Zash

After the grace period ends, if there were anything high-priority since the last activity from that other client, then it should push.

-

Zash

Not done that yet tho, I think

-

Zash

But as long as I'm active at another device, pushing to the phone is of no use

-

Zash

Tricky to handle the case of an incoming message just after typing "brb" and grabbing the phone to leave

-

Zash

Especially with a per-stanza yes/no/maybe function, it'll need a "maybe later" response
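
Zash's yes/no/"maybe later" idea can be sketched as a per-stanza classifier plus a timer check. It assumes a single "other client" activity timestamp and treats any activity there as having seen earlier messages; both are simplifications, and all names are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    PUSH = "yes"
    SKIP = "no"
    LATER = "maybe later"


class GracePeriod:
    """Hypothetical: while the user is active on another device, defer
    important stanzas; push once the grace period ends without activity."""

    def __init__(self, grace):
        self.grace = grace
        self.last_other_activity = None
        self.pending_important = False

    def on_other_client_activity(self, now):
        self.last_other_activity = now
        self.pending_important = False  # assume the user saw it there

    def classify(self, important, now):
        if not important:
            return Verdict.SKIP
        if (self.last_other_activity is not None
                and now - self.last_other_activity < self.grace):
            self.pending_important = True
            return Verdict.LATER
        return Verdict.PUSH

    def on_timer(self, now):
        """True means: the grace period ended with something pending, push."""
        if (self.pending_important
                and now - self.last_other_activity >= self.grace):
            self.pending_important = False
            return True
        return False
```

This also covers the "brb" case: a message arriving just after the last keystroke is deferred, then pushed when the grace period expires.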

-

Ge0rG

Zash: yeah. Everything is HARD

-

eta

also, for all Slack's complicated diagrams, their notifications don't even work properly either

-

eta

like it doesn't dismiss them on my phone, etc

-

flow

Zash> And the way to solve that is to throw data away. Enjoy telling your users that.

I'd say that's where there is TCP on top of IP (where, I'd argue, the actual congestion and traffic flow control happens)

-

Zash

flow: With TCP, same as XMPP, you just end up filling up buffers and getting OOM'd

-

flow

Zash, I don't think those two are really comparable: with TCP you have exactly two endpoints, with XMPP one entity communicates potentially with multiple endpoints (potentially over multiple different s2s links)

-

Zash

(me says nothing about mptcp)

-

Zash

So what Ge0rG said about slowing down s2s links?

-

flow

I did not read the full backlog, could you summarize what Ge0rG said?

-

flow

(otherwise I have to read it first)

-

Zash

13:31:21 Ge0rG "Holger, Zash: we could implement per-JID s2s backpressure"

-

flow

besides, aren't there still only two endpoints involved in MPTCP (just potentially using multiple paths)?

-

flow

I am not sure if that is technically possible, the "per-JID" part here alone could be tricky

-

flow

it appears that implementing backpressure would likely involve signalling back to the sender, but what if the path to the sender is also congested?

-

Zash

I'm not sure this is even doable without affecting other users of that s2s link

-

flow

as of now, the only potential solution I could come up with is keeping the state server side, and have servers notify clients that the state changes, so that clients can sync whenever they want, and especially how fast they want

-

flow

but that does not solve the problem for servers with poor connectivity
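
flow's idea of keeping state server-side and sending only infrequent, delayed notifications with the current head ID(s) could be modeled as a coalescing notifier. The per-room granularity, the `interval` parameter and the idea that the client then pages through MAM from its last-seen ID are assumptions of this sketch.

```python
class HeadNotifier:
    """Hypothetical coalescing notifier: instead of pushing every stanza,
    tell the client at most once per `interval` seconds that a room's head
    ID changed; the client then fetches the gap at its own pace."""

    def __init__(self, interval):
        self.interval = interval
        self.head = {}      # room -> latest stanza/archive ID
        self.notified = {}  # room -> (time, head ID) of last notification

    def on_new_stanza(self, room, stanza_id):
        self.head[room] = stanza_id

    def due_notifications(self, now):
        """Return {room: head_id} for rooms whose head changed since the last
        notification, if that notification is at least `interval` old."""
        due = {}
        for room, head in self.head.items():
            last = self.notified.get(room)
            if last is not None and (last[1] == head
                                     or now - last[0] < self.interval):
                continue
            due[room] = head
            self.notified[room] = (now, head)
        return due
```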

-

jonas’

let’s change xmpp-s2s to websockets / http/3 or whatever which supports multiple streams and will of course solve the scheduling issue of streams competing for resources and not at all draw several CVE numbers in that process :)

-

Zash

Not impossible to open more parallel s2s links...

-

jonas’

one for each local JID? :)

-

Zash

Heh, you could open a secondary one for big bursts of stanzas like MUC joins and MAM ....

-

Zash

Like I think there were thoughts in the past about using a secondary client connection for vcards

-

jonas’

haha wat

-

Zash

Open 2 c2s connections. Use one as normal, presence, chat etc there. except send some requests like for vcards over the other one, since they often contain big binary blobs that then wouldn't block the main connection :)

-

pulkomandy

Well… at this point you may start thinking about removing tcp (its flow control doesn't work in this case anyway) and do something xml over udp instead?

-

Zash

At some point it stopped being XMPP

-

moparisthebest

QUIC solves this